Club Log has been a great testing ground and learning tool in the last few years. I’ve picked up a lot of ideas along the way about how to get MySQL to scale (without giving up and going for a Map Reduce solution). This is a fairly technical post aimed at software developers who are using MySQL in a data mining and reporting context. I hope it’s of interest!

Getting Bigger

Around about the time when Club Log reached 30 million QSOs, I could see that having a big MySQL table of log data was going to lead to trouble, since I would have competing reads and writes all aiming for the same data concurrently. This would deadlock the database, even if I used InnoDB, since most of my reports legitimately scan huge ranges of QSO rows. In fact, at an early stage I had this issue and had to slow down the queue which processes users’ uploads to try and compensate.

Today the database is 110 million QSOs (September 2012). Club Log is run on a very limited budget. All costs are paid by donations so I really want to squeeze everything I can out of commodity equipment. Club Log is hosted on a Compaq server with 32GB RAM (mostly given over to a Percona MySQL instance and memcached) and the storage is a speedy RAID 10 array of 4 OCZ solid state disks. That’s the total sum of it.

I had already done the obvious stuff: caching, queuing, batch deletes and batch inserts, partitioning and summary tables were starting to pop up all over the place.

Summary Tables show promise!

The propagation tool in Club Log uses a highly condensed table structure which is populated from the logs, then indexed in a very specific way to accelerate a very specific kind of query. This means you can get propagation graphs between any two countries, with any SFI value, in milliseconds. It’s quite a pleasing outcome since the same report on the raw logs takes minutes.

It’s tempting to think that a summary table exists for any report you can dream of, but I was starting to find this was not the case.

Where Summary Tables stop working

In 2012 I began to support league tables which allow any combination of bands, club or continent filters, mode, QSL and date filters to be generated in real-time by Club Log users. The number of permutations of data this involves far exceeds anything that can be pre-calculated (around 7 million unique league tables).

The biggest challenge is knowing the total number of DXCCs worked within the scope of a filter. Let me give you an example: if I have worked 100 DXCCs on 9 bands, how many have I worked on 5 bands? The answer cannot be discovered without doing a SELECT(DISTINCT()) across those specific bands, and the best summary table would still have to make calculations for every permutation of filter on a per-band basis. That’s way too many summary tables.

When I first released this feature, I found it was fairly slow, but the popularity of being able to filter out bands (eg. 60m) was extremely high. Users had asked me many times about being able to do this kind of customisation. So, the feature was valuable but the computation was rather extreme. My summary tables had excessively large ranges within each partition or index, and there wasn’t really any way to get those numbers into a shorter range. Some queries took 10 seconds (eg. the global master league table had to find everyone’s per band totals before ranking them and displaying them in real time).

Partitions + Filtering through temporary tables to the rescue

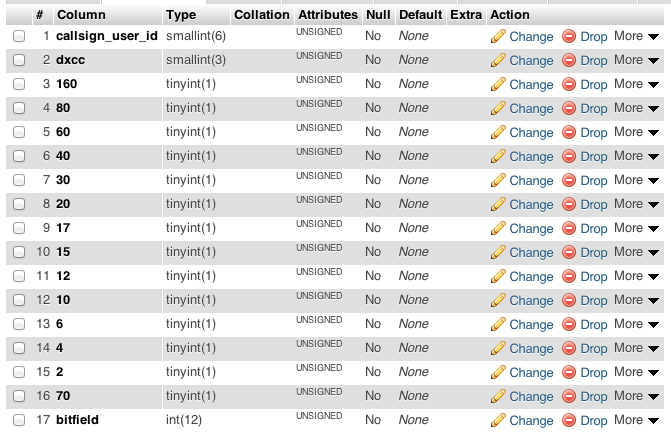

I found another way to represent the data – still in MySQL – which seems to be working. Firstly, I created a new summary table which contained just the DXCC, user and bands as bitfields (on or off), with one binary counter to enumerate the kind of filter.

Now I have a very simple table, and there is one row for each DXCC per user for each permutation of filters, but all the bands are represented separately in a single row. This is still a very, very large table but maybe you can see that it has potential in terms of solving the “how many DXCCs on 5 bands” question.

What is the bitfield? Well, the bitfield flips a bit a when an element of the report filtering changes. For example, if you want a league table based on confirmed QSOs, then the first bit field is flipped. If you want CW only, the next bit is flipped. The outcome is a field which represents a unique permutation of the report filters (there are 48, today).

This field then becomes my table partition. I used a MySQL LIST partition and nominated a separate partition for every value of bitfield. Since all queries are for a specific permutation, this reduces the search space by 1/48 for that specific query, dramatically shortening the table scans involved. All of my SELECTs start with “WHERE bitfield=’n'”.

The remaining problem is that by using columns for each band, I’ve made grouping and counting virtually impossible. All of the power of SQL is lost when trying to perform aggregation against a table structure of this sort.

However, the final part of the puzzle is using temporary tables. I can query the summary table to produce a more flexible, intermediate version of the data in memory. For example, I can start with this temporary table:

CREATE TEMPORARY TABLE ranking SELECT `dxcc`,`callsign_user_id`,(`160`+`80`+`60`+`40`+`30`+`20`+`17`+`15`+`12`+`10`+`6`+`4`+`2`+`70`) as slots FROM `logs_summary` WHERE `bitfield`='[my permutation of report]' GROUP BY `callsign_user_id`,`dxcc`;

Notice that it’s very easy to snip out a band or two. In the real code in Club Log, the whole of this temporary table creation is dynamic, and specific to a particular report. Notice also that the first WHERE filter is against the bitfield – that’s where the table partition cuts the data down immediately to 1/48 of its size.

Once I have the temporary table in memory, I can start to do grouping and aggregation again (and now I’m dealing with a much smaller, purpose-built table of numbers that is friendly towards SQL reporting). For example, I can rank slots:

SELECT callsign_user_id,SUM(`slots`) FROM `ranking` GROUP BY `callsign_user_id`;

or I can rank DXCCs:

SELECT `callsign_user_id`,count(distinct(`dxcc`)) FROM `ranking` GROUP BY `callsign_user_id`;

These queries are order N(1) and trivially fast to compute; so much so that generating a whole league table is now tractable again.

In summary:

- Reduce the log data to a summary table which is expressive enough and partition-friendly enough, even if it is not SQL-friendly

- Generate temporary tables from the summary table

- Perform trivial queries against the temporary table

Caching takes care of removing needless repetition of the processing work involved, so I am not worried about keeping the temporary tables on disk.

I’m not sure many readers will have got this far. If you have, and you have any questions (perhaps I assumed too much prior knowledge of the data structures?) please feel free to ask.